TL;DR

- In an engagement we found an open directory on the internet belonging to our client

- By enumerating it we found a zip archive with a configuration file holding usernames and passwords

- That file gave us access to the client’s ArcGIS instance

- This contained a treasure trove of information about the client’s AD and Office 365 environment

- Automated pen testing would never have found this

Introduction

Late last year we were doing an external infrastructure test. Ask any penetration tester and they’ll probably tell you that these sorts of assessments are usually straightforward. Run vulnerability and port scans, do your OSINT, try some slightly wacky stuff using a cool new tool, write the report over your 4th coffee and call it a day.

This is a story of that process, but with a twist at the end. It serves as a lesson that pen testing can never be automated. An automated test could never have joined the dots and used the ArcGIS instance and Active Directory connection as a vector.

Discovery

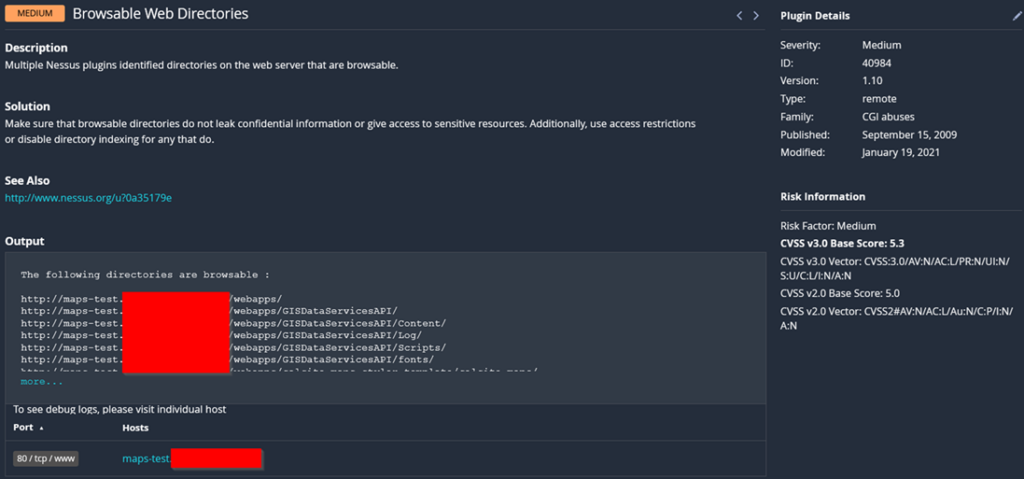

Our scope was simple: here’s a list of domain names and IP addresses, go off and see what you can break. Skipping the majority of the reconnaissance, Nessus eventually discovered a promising open directory on a test subdomain for us to nose around in.

Figure 1: /webapps directory found by Nessus

Open web directories on the public internet, while not recommended, are fairly common. Although Nessus reported accessible files, they were likely just test data that wasn’t worth spending too much time on, right? Especially considering the subdomain was called maps-test.

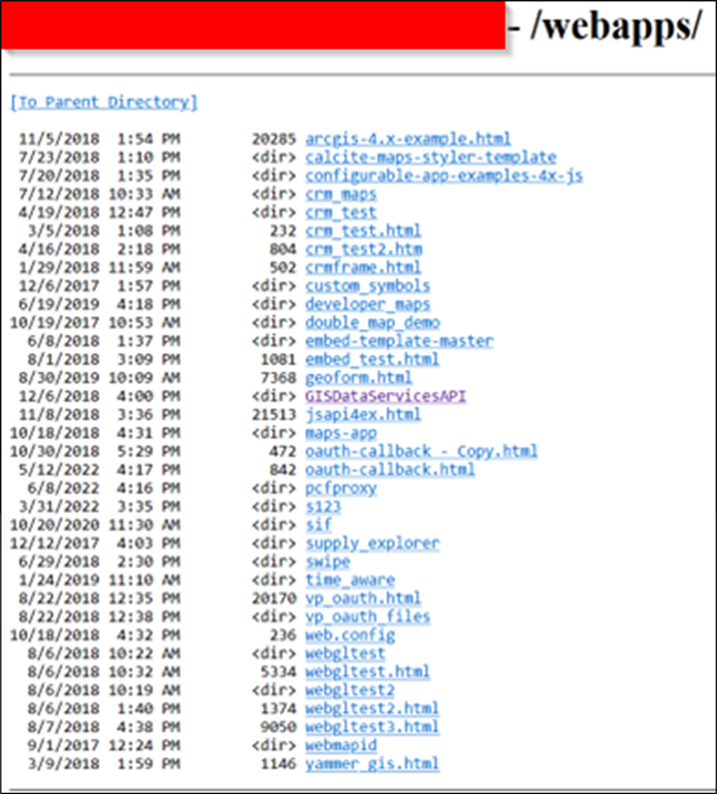

Figure 2: Root of the directory listing

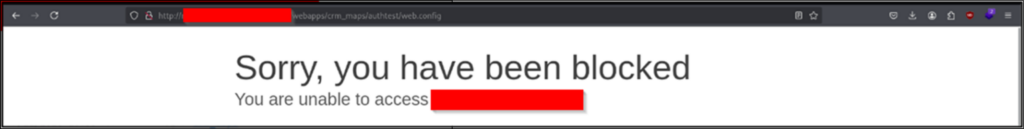

Right away, the web.config file stands out. These files can often hold credentials, file paths and other data that we get excited about. Unfortunately (for us), Cloudflare stopped us in our tracks when we tried to access it:

Figure 3: The Cloudflare page you get when trying to view the file

Points for Cloudflare on that one. Fair enough, it looks like we’ll need to start digging into some of these directories.

Enumeration

The vast majority of files in the open directory appeared to be various testing and pre-production applications. Why they were stored openly we’re not sure, but we couldn’t find any obvious production secrets in the directory, which was a relief for the client. Indeed, Figure 2 shows that some of the directories have been around since 2017, so our initial thoughts were that no live secrets would be present for us to use, and that the open directory had simply been forgotten about.

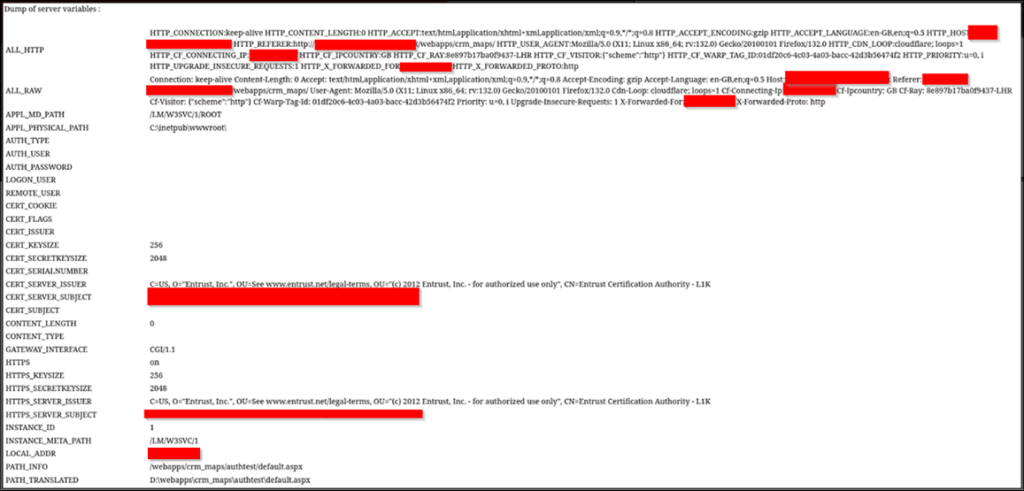

That’s not to say there weren’t any files in the dump useful to an attacker. After a few short minutes of searching, we found a page that was dumping server information out to us:

Figure 4: Server variables on the public share

Again, there were no juicy API keys or other secrets in the environment variables, but local IP addresses, file paths and operating system information are worth noting.

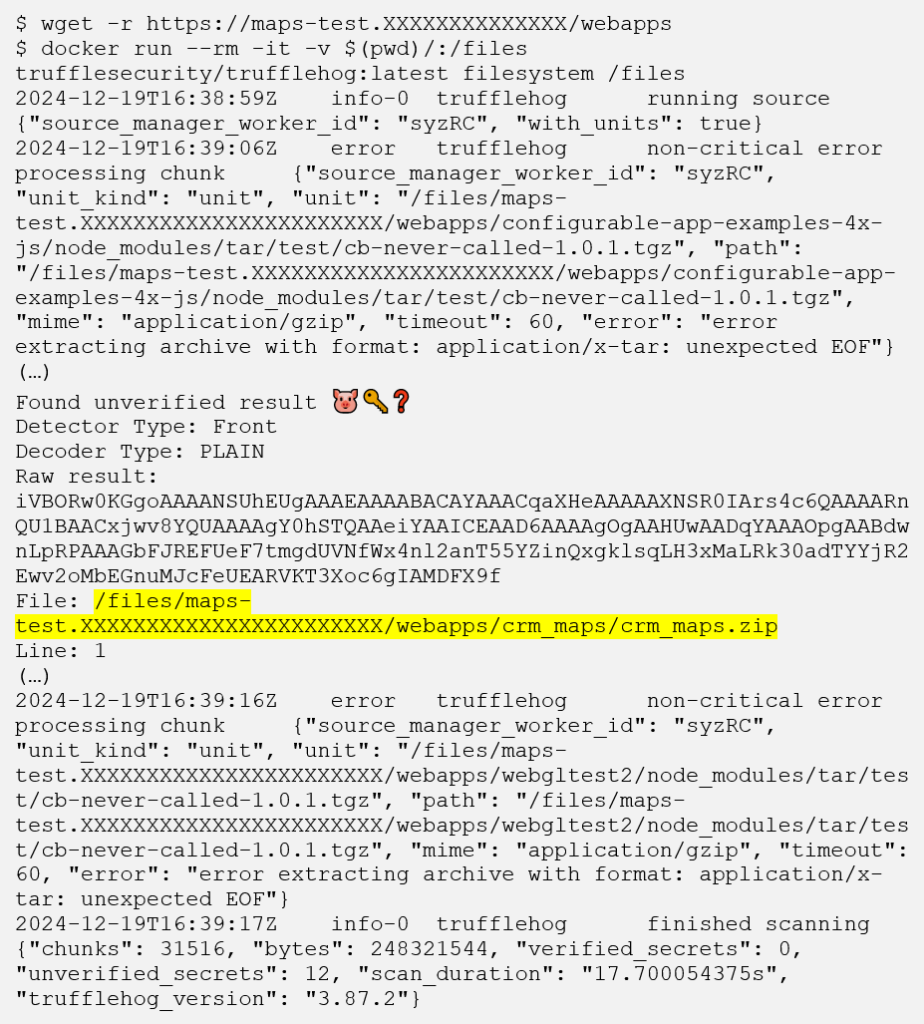

It quickly became obvious that searching through the entire 500MB+ directory wouldn’t be feasible within the assessment timeframe, so we started looking into options for ways to search through it quickly for interesting stuff. One of those ways was the TruffleHog tool, which searches for secrets in a local or remote file structure using a set of regexes. Among many false positives it flagged, this alert seemed interesting to us:

The output above might seem boring (and it is), but it does give us a clue on the sort of filetypes we should be prioritising. The errors around unexpected EOF in the logs seemed to suggest that TruffleHog had trouble scanning archive files (e.g. .zip and .tar.gz).

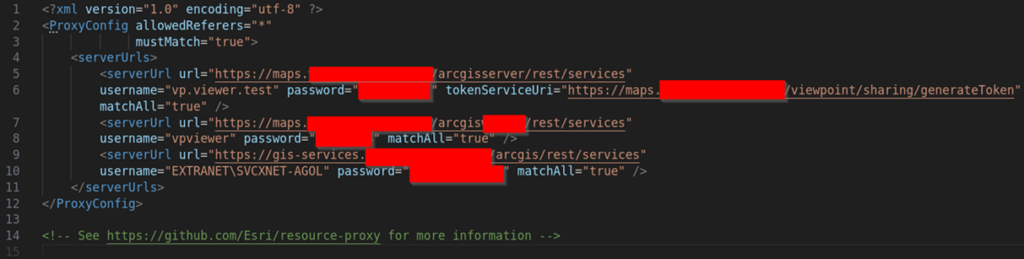

Extracting the problematic archive and running TruffleHog again didn’t reveal anything different. However, a proxy.config file in the root of the unzipped archive did contain credentials, and even links to the services they were used for:

Figure 5: Plaintext usernames and passwords in proxy.config file
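The archive blind spot described above can be narrowed by listing what is inside each archive before deciding what to extract and read by hand. Below is a rough sketch of how that triage could be scripted against a local mirror of the directory. It is not the tooling used on the engagement, and the `INTERESTING_NAMES` list and the `find_config_files_in_zips` helper are our own illustrative assumptions.

```python
import zipfile
from pathlib import Path, PurePosixPath

# Hypothetical list of file names worth a manual look; adjust per engagement.
INTERESTING_NAMES = {"proxy.config", "web.config", "appsettings.json", ".env"}


def find_config_files_in_zips(root):
    """Yield (archive_path, member_name) for interesting files inside .zip archives."""
    for archive in sorted(Path(root).rglob("*.zip")):
        try:
            with zipfile.ZipFile(archive) as zf:
                for member in zf.namelist():
                    # Zip member names always use forward slashes.
                    if PurePosixPath(member).name.lower() in INTERESTING_NAMES:
                        yield archive, member
        except zipfile.BadZipFile:
            # Truncated or corrupt archives are skipped rather than aborting the sweep.
            continue
```

Only member names are inspected here, so nothing is written to disk until a hit looks worth extracting. Other archive types such as .tar.gz would need their own handling, for example with the standard `tarfile` module.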

ArcGIS

ArcGIS is a solution to connect various data sources together in order to display them as part of an interactive map. In our case, the client was using it to aggregate reports across their estate for data analysis. It’s also possible to connect it up to Active Directory for easier user and permission management. Pretty useful for any potential attacker!

Using the credentials above, we can access some parts of their ArcGIS estate:

Figure 6: Successful authentication to ArcGIS REST service client

vpviewer was disabled and EXTRANET\SVCXNET-AGOL was likely for an internal domain user. We did try to authenticate to Office 365 with some likely email addresses for these accounts, but had no luck.

With no external domain services and Office 365 authentication unsuccessful, we focused our efforts on the vp.viewer.test user within ArcGIS itself.

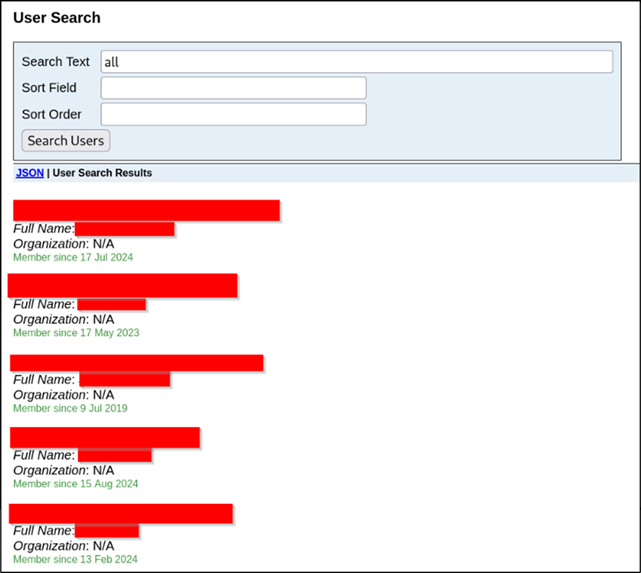

We were able to view a fair amount of employee and business data, as shown below:

Figure 7: Extensive search and viewing of any user within the client’s Office 365 tenancy

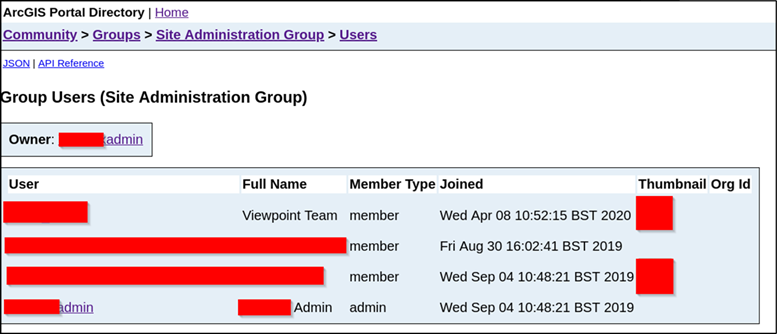

Figure 8: Viewing an administrator user’s details. Some email addresses were also revealed here

Figure 9: Enumeration of groups. Note that some users’ full names, email/usernames and profile pictures were revealed

If you were expecting some scary, wild story about how we compromised the client’s tenant through this account, sadly you’ll be disappointed. Since it was a production system that was highly likely to be critical to the client’s stability, they were understandably not keen for us to try and make any changes to it. At the bottom of Figure 8, for example, there is some dangerous functionality that may have led to an account takeover, but this was not attempted due to fears of disruption throughout the client’s network.

Reaction

Interestingly, at the time of writing, TruffleHog does not support the detection of proxy configuration files for old ArcGIS projects. These are now deprecated thanks to ArcGIS’s implementation of proxy settings within the app itself. However, since the project was only discontinued in April 2023, working credentials for existing, exposed ArcGIS applications may still be present on the open internet.

We were assured by the client that secret scanning was in place to detect the exposure of credentials. However, as we saw above, scanning alone cannot include a regex for every format of credentials ever made, and gaps are inevitably found. A feature request has since been raised to add support for proxy credentials, but this is just one tadpole in a big pond.
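To give a feel for what closing one such gap involves, here is a minimal standalone check for this kind of file. It is not a TruffleHog detector. It also assumes the entry shape seen in Esri’s public resource-proxy samples, where each `serverUrl` element carries `url`, `username` and `password` attributes, so the patterns and the `find_proxy_credentials` name should be treated as illustrative.

```python
import re

# Assumed shape of an entry, based on Esri's public resource-proxy samples:
#   <serverUrl url="https://host/arcgis/rest/services" username="u" password="p" />
SERVER_URL_TAG = re.compile(r"<serverUrl\b[^>]*>", re.IGNORECASE)
ATTRIBUTE = re.compile(r'([\w:-]+)\s*=\s*"([^"]*)"')


def find_proxy_credentials(text):
    """Return url/username/password dicts for entries with a non-empty password."""
    findings = []
    for tag in SERVER_URL_TAG.findall(text):
        # Attribute names are lower-cased so matching is case-insensitive.
        attrs = {name.lower(): value for name, value in ATTRIBUTE.findall(tag)}
        if attrs.get("password"):
            findings.append({
                "url": attrs.get("url", ""),
                "username": attrs.get("username", ""),
                "password": attrs["password"],
            })
    return findings
```

Pairing each password with its `url` attribute matters as much as finding the secret, since it tells whoever triages the alert which service needs its credentials rotated.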

Once made aware of the issue and given our recommended course of remediation, the client took the open web directory offline and changed the exposed credentials.

Conclusion

We should mention that other than this issue, our assessment against this client showed that their external infrastructure posture was well-matured and consisted of several layers of defence against common attacks.

However, our assessment also showed that forgotten or misconfigured services can hold sensitive secrets that remain live long into the future. While the impact of this can be limited in nature, even the disclosure of information can lead to a more serious breach of internal resources. Our recommendations for mitigating any impacts in this kind of scenario are clear:

- Conduct regular security audits, both from an internal and external perspective

- Ensure the segregation of production and non-production environments is iron-clad

- Use multi-factor authentication wherever possible

- Store credentials within dedicated secrets managers and away from hard-coded configuration files where possible
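As a small illustration of the last recommendation, an application can read its service credentials from the process environment, populated at deploy time by whichever secrets manager is in use, instead of from a file that ships with the code. This is only a sketch: the variable names `ARCGIS_PROXY_USER` and `ARCGIS_PROXY_PASSWORD` and the `load_service_credentials` helper are hypothetical.

```python
import os


def load_service_credentials(env=os.environ):
    """Read credentials from the process environment, failing loudly if absent."""
    # ARCGIS_PROXY_USER / ARCGIS_PROXY_PASSWORD are hypothetical variable names.
    try:
        return env["ARCGIS_PROXY_USER"], env["ARCGIS_PROXY_PASSWORD"]
    except KeyError as missing:
        raise RuntimeError(f"missing required secret: {missing.args[0]}") from None
```

With this pattern, an archive of the application that ends up somewhere it shouldn’t contains no working credentials.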
