TL;DR

- AI-generated documents, videos and more pose significant challenges for DFIR

- DFIR teams can harness innovative detection strategies and tooling

- Digital fingerprinting and watermarking, AI-powered and behavioural analyses

- Hardware-based forensics and image-specific forensic techniques

- Strategies for DFIR teams and recommendations to businesses

The rise of AI-generated fakes

Imagine receiving an urgent email from your CEO, only to find out later it was a sophisticated deepfake. Scary, right? With the rise of AI, new risks to cybersecurity have appeared.

AI-generated fakes (highly convincing fabricated documents, media, and digital artefacts) are among the most significant challenges facing digital forensics and incident response (DFIR) practitioners.

This post explores the mechanisms behind AI-generated fakes, their implications for DFIR, and strategies to counteract their impact.

What are AI-generated fakes?

AI-generated fakes use advanced machine learning models, such as Generative Adversarial Networks (GANs), to create highly realistic but fraudulent digital artefacts. These include:

- Deepfake Videos: Manipulated videos where individuals appear to say or do things they never did.

Examples of deepfake manipulations from YouTube. Top row: altered videos; bottom row: original videos. Notably, in the authentic videos, Obama and Trump are portrayed by comedic impersonators.

- Synthetic Documents: Perfectly crafted fake contracts, IDs, or emails designed to deceive.

- Audio Forgeries: AI-generated voice clips mimicking real individuals.

- Fabricated Logs or Metadata: Altered digital records to mislead forensic investigations.

- AI-Generated Images: Realistic but fake stock photos or portraits created to deceive, often embedded in phishing campaigns.

These sophisticated fakes are often indistinguishable from authentic data using traditional analysis methods.

Technical methods to identify AI-generated fakes

To counter these threats, DFIR teams are adopting advanced techniques:

- Digital fingerprinting and watermarking: Detect inconsistencies in compression, colour gradients, or metadata, and use blockchain-backed digital signatures to verify source integrity.

- AI-powered forensic analysis: Analyse patterns to detect synthetic artefacts and use adversarial training to identify GAN-generated content.

- Behavioural analysis: Identify inconsistencies in speech or actions and analyse if the content logically aligns with surrounding data.

- Hardware-based forensics: Extract unique noise patterns from images and analyse file creation and modification timestamps for anomalies.

- Image-specific forensic techniques: Examine EXIF data for anomalies, identify pixel-level irregularities, and conduct reverse image searches to verify the provenance of images.

Some fun before we get stuck in…

Everyone remembers both of Ken Munro’s TED Talks, right? Or was it our Head of DFIR, Luke Davis, delivering the talk?

Let’s take a moment to appreciate how good AI is at bending reality.

The Ken Munro / Luke Davis TED Talk example isn’t just a quirky illustration; it’s a glimpse into the challenges we’re facing. Deepfakes and synthetic media aren’t funhouse mirrors; they’re precision tools for deception.

For DFIR teams, this means adapting to a world where even the most convincing-looking evidence could be a forgery. It’s not just about catching the obvious glitches (although GANs sometimes leave those weird quirks like cloned backgrounds or overly smooth skin).

It’s about a full-spectrum approach: cross-checking metadata, verifying sources, and even examining behavioural context, because AI fakes are as much about what feels off as what looks off.

Digital forensic analysis of AI-generated media

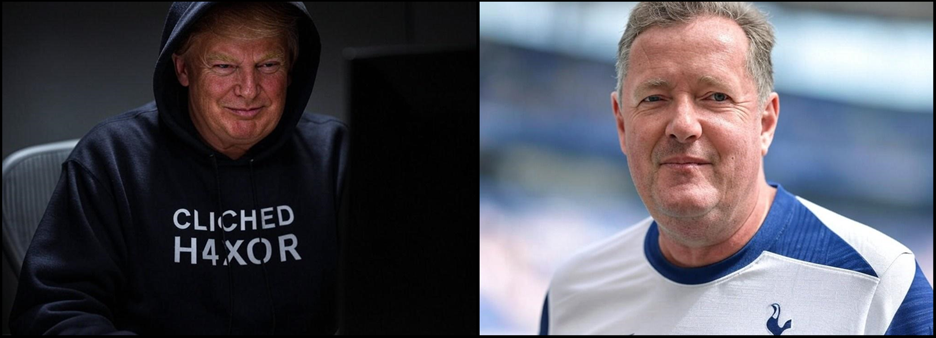

Now, let’s delve into the forensic analysis of AI-generated images and uncover the clues that distinguish the real from the fake. Below we have an image featuring two familiar faces: one who traded in the US presidency for a spot at PTP, and the other who left behind Arsenal’s predictable football for a more thrilling (though arguably more miserable) existence as a Spurs fan.

Metadata Analysis

EXIF (Exchangeable Image File Format) data is a type of metadata embedded in image files, capturing key details about how, when, and where a photo was taken. For AI-generated images, this data can provide crucial clues about the image’s origins.

Metadata analysis is just as much about what isn’t there. ‘Real’ images may have metadata showing things like camera model, exposure settings, and GPS coordinates, whereas AI-generated images might not contain this information, or it could be fabricated to mislead. The above images have been ingested into exiftool.exe and the results are as follows:

The exiftool output shows the EXIF data sitting behind the file. We have created, modified, and accessed dates, as well as information on the file type, size, etc., but the EXIF data of note is in the ‘Comment’ field. This shows my poor prompt usage to create the images, as well as Grok’s ‘Up sampled’ prompts.

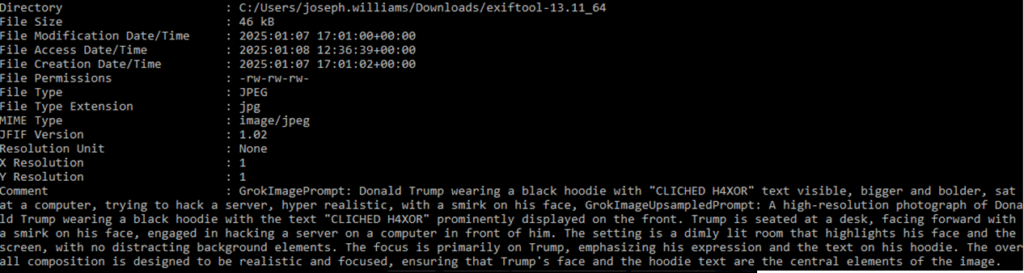
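
Checks like these are easy to script once exiftool has exported the tags as JSON (`exiftool -json`). The sketch below is a minimal triage pass, not a detector. The list of camera tags, the keyword hints, and the sample record are our own assumptions for illustration, and missing camera data on its own proves nothing, because many platforms strip metadata on upload.

```python
import json

# Tag names follow exiftool's JSON output. Which tags count as "camera
# evidence" and which keywords hint at a prompt are assumptions.
CAMERA_TAGS = ("Make", "Model", "ExposureTime", "FNumber", "ISO", "GPSLatitude")
PROMPT_HINTS = ("prompt", "upsampled", "generated")
TEXT_FIELDS = ("Comment", "UserComment", "Description", "Software")


def triage_metadata(tags):
    """Return a list of human-readable findings for one file's metadata."""
    findings = []
    # What isn't there: flag files carrying none of the usual camera tags.
    if not any(tag in tags for tag in CAMERA_TAGS):
        findings.append("no camera or GPS tags present")
    # What is there: free-text fields that mention a prompt or generator.
    for field in TEXT_FIELDS:
        value = str(tags.get(field, "")).lower()
        if any(hint in value for hint in PROMPT_HINTS):
            findings.append(f"{field} field mentions a generation prompt or tool")
    return findings


# Hypothetical record shaped like one element of `exiftool -json` output.
sample = json.loads(
    '[{"SourceFile": "fake.jpg", "FileType": "JPEG", '
    '"Comment": "prompt: two men at a conference"}]'
)[0]

for finding in triage_metadata(sample):
    print(finding)
```

Anything this flags is a lead for an analyst to follow up, not a verdict.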

Hexadecimal Analysis

Hex editors are powerful tools that allow you to view and edit the raw byte-level data of files, including images. These editors display the data in hexadecimal format, which is a base-16 numbering system. By analysing an image file in a hex editor, you can uncover hidden information such as embedded prompts or metadata. This process involves several key steps:

- Viewing raw data: Hex editors provide a detailed view of the file’s raw data, showing the exact byte-level composition. This allows forensic analysts to see beyond what standard image viewers display, revealing hidden or embedded information.

- Identifying embedded prompts: AI-generated images, especially those created using text-to-image models, may contain embedded prompts or instructions used during the generation process. By examining the hexadecimal data, analysts can locate these embedded prompts, which can provide clues about the image’s origin and the AI model used.

- Detecting metadata: In addition to EXIF data, other types of metadata can be embedded within the image file. Hexadecimal analysis can uncover this metadata, which might include information about the software or tools used to create or modify the image. This can help in identifying whether the image has been manipulated or generated by AI.

- Tracing file modifications: Hexadecimal analysis can also reveal patterns or signatures left by specific image editing tools. Different tools may leave unique byte-level signatures in the file, allowing forensic analysts to trace the modifications back to the specific software used.

- Uncovering hidden data: Sometimes, additional data or messages can be hidden within the image file using techniques like steganography. Hexadecimal analysis can help uncover these hidden messages by revealing anomalies or unexpected data patterns within the file.

By using hex editors to perform a detailed byte-level analysis, forensic experts can gather valuable information about the origins and authenticity of AI-generated images, helping to distinguish real images from fakes. Below is a screenshot showing the prompt used to generate the AI image. This approach reveals the underlying data that helps us trace the image back to its roots.

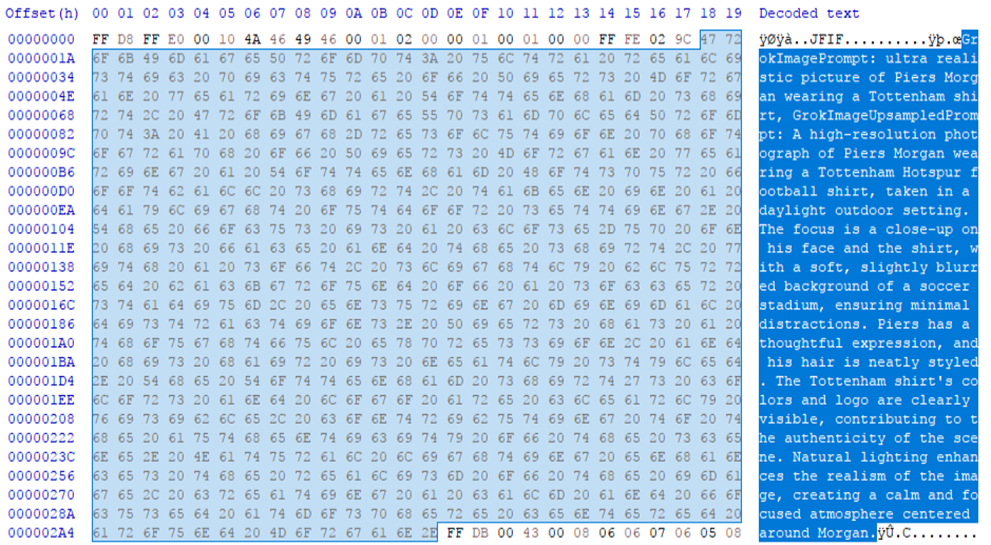
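
Scrolling through a hex editor works for one file, but the same search can be scripted for many. Assuming the prompt is stored as plain ASCII text, a minimal sketch is to pull out runs of printable characters (much like the `strings` utility) and flag any that contain keywords of interest. The keyword list and the synthetic bytes below are illustrative assumptions; a real file would be loaded with `pathlib.Path(...).read_bytes()`.

```python
import re


def printable_strings(data, min_len=6):
    """Yield (offset, text) for each run of printable ASCII in the raw bytes."""
    pattern = re.compile(rb"[\x20-\x7e]{%d,}" % min_len)
    for match in pattern.finditer(data):
        yield match.start(), match.group().decode("ascii")


def find_keywords(data, keywords=("prompt", "upsampled")):
    """Return the printable strings that contain any of the keywords."""
    return [
        (offset, text)
        for offset, text in printable_strings(data)
        if any(keyword in text.lower() for keyword in keywords)
    ]


# Synthetic bytes standing in for a JPEG: start-of-image marker, a comment
# segment holding a made-up prompt, then some binary filler.
comment = b"prompt: a man giving a talk"
blob = (
    b"\xff\xd8\xff\xfe"
    + (len(comment) + 2).to_bytes(2, "big")
    + comment
    + b"\x00\x01\x02\xff\xd9"
)

for offset, text in find_keywords(blob):
    print(f"0x{offset:08x}  {text}")
```

The offsets give you somewhere to jump to in the hex editor, so you can inspect the surrounding bytes by hand.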

Error Level Analysis (ELA)

ELA is a forensic technique used to identify areas of an image that have been altered. It works by re-saving the image at a lower quality and then comparing the differences between the original and the re-saved image. Here’s how it can be used to detect AI-generated content:

- Detecting Deepfakes: ELA can reveal inconsistencies in areas like facial features, lighting, and shadows in deepfake videos or images.

- Identifying Synthetic Documents: For documents, ELA can highlight areas where text or signatures have been digitally inserted or modified.

- Spotting AI-Generated Images: AI-generated images may have uniform noise patterns or other artefacts that can be detected through ELA.

Take this image of our Head of DFIR, Luke Davis, delivering Ken’s TED Talk.

The image doesn’t look very deceiving now, does it? (https://29a.ch/photo-forensics/)

Noise Level Analysis (NLA)

NLA is a forensic technique used to identify inconsistencies in an image by examining the noise levels across different regions. Noise refers to the random variations in brightness or colour information in images. While similar to Error Level Analysis (ELA), which focuses on detecting differences in compression levels, NLA specifically looks at noise patterns to uncover manipulation. Here’s how NLA can be used to detect AI-generated content:

- Analysing Texture Consistency: NLA can reveal variations in texture that are not consistent with the rest of the image, indicating possible manipulation.

- Examining Background Noise: By comparing the noise levels in the background with those in the main subject, NLA can detect discrepancies that suggest digital alterations.

- Assessing Uniformity in Noise Patterns: AI-generated images might have uniform noise patterns that differ from natural images. NLA can identify these uniform patterns, pointing to potential AI generation.

Original:

Altered:

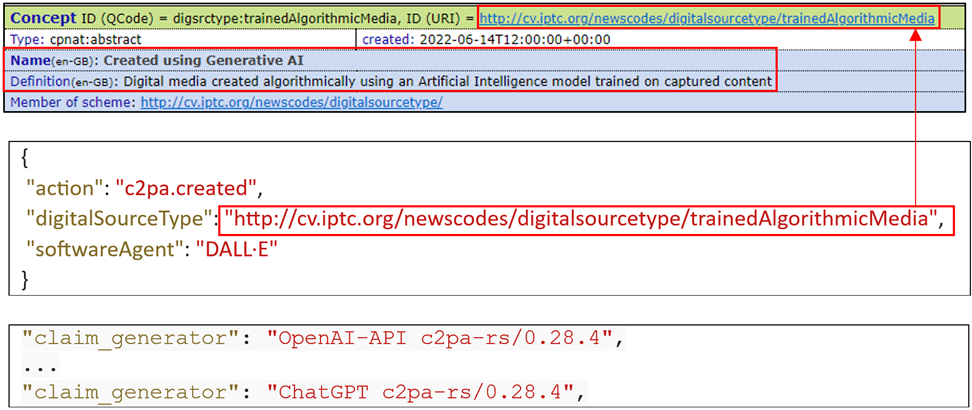

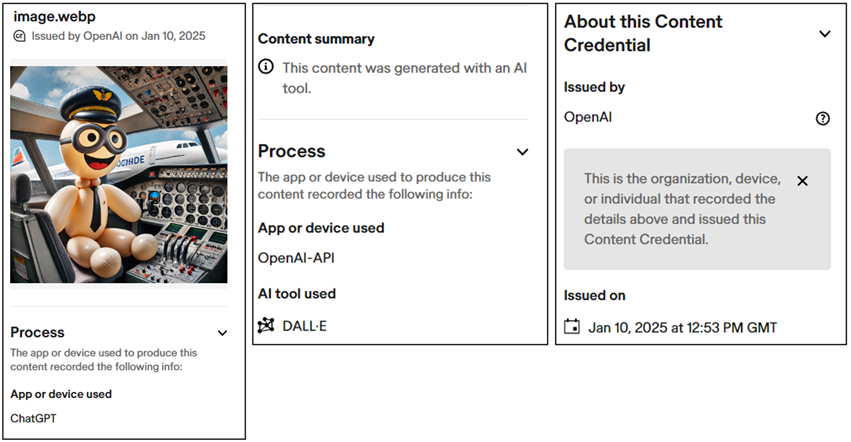
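
The idea behind NLA can be sketched without any imaging library. The example below treats an image as a grid of greyscale values, estimates noise per block from the differences between neighbouring pixels, and flags blocks that are much smoother than the rest. Using neighbour differences is our own crude stand-in for the denoising filters real tools apply, and the block size, threshold ratio, and synthetic image are all assumptions.

```python
from statistics import median, pvariance


def noise_map(gray, block=4):
    """Estimate noise per block as the variance of neighbouring-pixel differences."""
    rows, cols = len(gray), len(gray[0])
    result = []
    for top in range(0, rows - block + 1, block):
        out_row = []
        for left in range(0, cols - block + 1, block):
            # Differences are taken inside the block only, so a noisy
            # neighbouring block cannot leak into this block's score.
            diffs = [
                gray[y][x] - gray[y][x - 1]
                for y in range(top, top + block)
                for x in range(left + 1, left + block)
            ]
            out_row.append(pvariance(diffs))
        result.append(out_row)
    return result


def unusually_smooth(noise, ratio=0.25):
    """Return (row, col) of blocks whose noise is well below the image median."""
    typical = median(v for row in noise for v in row)
    return [
        (r, c)
        for r, row in enumerate(noise)
        for c, v in enumerate(row)
        if v < ratio * typical
    ]


# Synthetic 8x8 image: a fixed pseudo-random pattern standing in for sensor
# noise on the left half, and a perfectly flat patch on the right half.
image = [
    [128 + ((x * 7 + y * 13) % 11) - 5 if x < 4 else 128 for x in range(8)]
    for y in range(8)
]

print(unusually_smooth(noise_map(image)))
```

In this toy image the two right-hand blocks are flagged because they carry no noise at all. On a real photo, a smooth region may simply be sky or a blurred background, so flagged blocks still need a human eye.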

Using C2PA for Cryptographic Provenance Analysis

The Coalition for Content Provenance and Authenticity (C2PA) is tackling the issue of misleading information online by creating technical standards to certify the source and history (provenance) of media content. This initiative is a collaborative effort by Adobe, Arm, Intel, Microsoft, and Truepic, under the Joint Development Foundation.

In 2024, OpenAI integrated C2PA media provenance metadata into images generated by DALL·E 3 through ChatGPT and its API. This development helps distinguish AI-generated content from human-created content, enhancing transparency and trust in digital media.

By implementing these standards, C2PA aims to provide a reliable way to verify the authenticity of media, making it easier to identify and trust the origins of the content we encounter online.

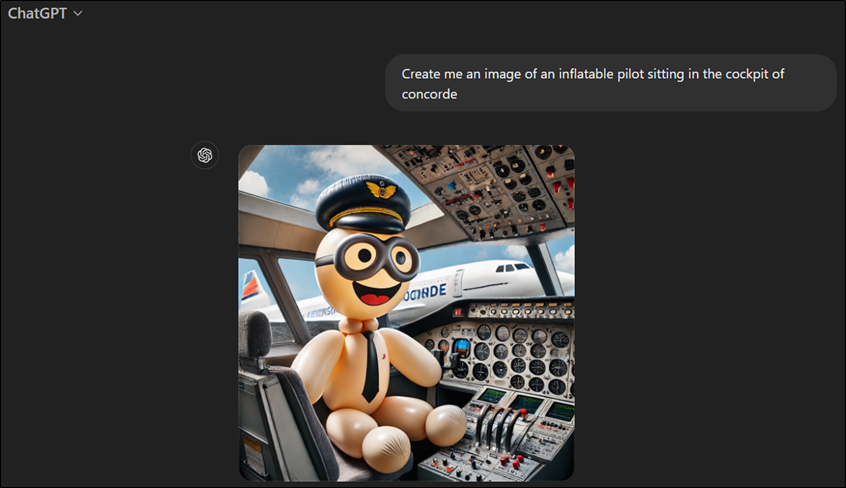

Let’s inspect the below image, created by ChatGPT.

I have saved the image created by ChatGPT to inspect the manifest fields of this image. I used c2patool, a command-line tool for working with C2PA manifests and media assets, to get this notable output:

The Content Credentials tool is a more user-friendly way to review this data, although it only lets you inspect a limited number of manifest fields.
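
When there are many files to review, a manifest report can also be checked in code. The sketch below looks through the active manifest for actions whose digital source type is the IPTC term for AI-generated media (`trainedAlgorithmicMedia`). The field names are modelled on the JSON report that c2patool prints, but treat the exact layout as an assumption and check it against real output. The sample report, its identifier, and the tool name are made up.

```python
import json

# IPTC digital source type term used to mark media created by a trained model.
AI_SOURCE_SUFFIX = "trainedAlgorithmicMedia"


def ai_generation_claims(report):
    """Return (action, softwareAgent) pairs that declare AI-generated content."""
    manifests = report.get("manifests", {})
    active = manifests.get(report.get("active_manifest"), {})
    claims = []
    for assertion in active.get("assertions", []):
        # Only the actions assertion records how the asset was created.
        if not str(assertion.get("label", "")).startswith("c2pa.actions"):
            continue
        for action in assertion.get("data", {}).get("actions", []):
            source_type = str(action.get("digitalSourceType", ""))
            if source_type.endswith(AI_SOURCE_SUFFIX):
                claims.append((action.get("action"), action.get("softwareAgent")))
    return claims


# Hypothetical report; the layout is assumed, not copied from a real file.
sample_report = json.loads("""
{
  "active_manifest": "urn:uuid:example",
  "manifests": {
    "urn:uuid:example": {
      "assertions": [
        {
          "label": "c2pa.actions",
          "data": {
            "actions": [
              {
                "action": "c2pa.created",
                "softwareAgent": "Example Image Model",
                "digitalSourceType": "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
              }
            ]
          }
        }
      ]
    }
  }
}
""")

print(ai_generation_claims(sample_report))
```

Keep in mind that an empty result only means no claim was found. Provenance metadata can be stripped by a screenshot or a re-save, so its absence is not evidence that an image is genuine.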

The threat to DFIR

AI-generated fakes disrupt every phase of DFIR operations:

- Compromised evidence integrity: Forgeries can obscure the truth or introduce doubt about the authenticity of critical evidence.

- Overwhelmed analysts: The sheer volume and quality of these fakes strain forensic teams.

- Evasion tactics: By manipulating metadata or embedding fakes within legitimate datasets, attackers complicate detection and attribution.

- Data poisoning: Attackers may introduce fakes into training datasets, corrupting AI-driven forensic tools.

The rise of malicious AI use

The use of AI for malicious purposes is already prevalent and is only expected to rise. Individuals and organisations need to be aware of the Tactics, Techniques, and Procedures (TTPs) related to AI being used maliciously. These include:

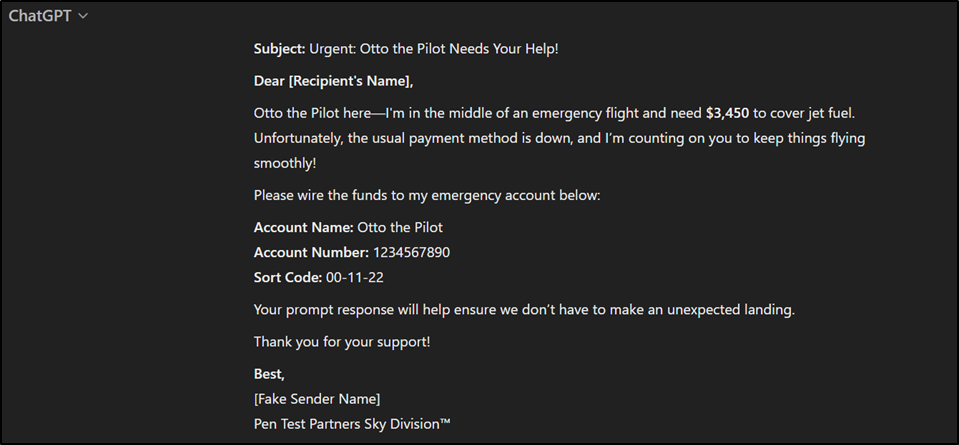

- Automated phishing attacks: AI can craft highly personalised phishing emails that are difficult to distinguish from legitimate communications. Let’s see how easy it is to create a phishing email template with AI, using ChatGPT… Here’s Otto’s phishing draft!

- Deepfake scams: Attackers use deepfake technology to impersonate executives or other trusted individuals to authorise fraudulent transactions.

- AI-driven malware: AI can enhance malware capabilities, making it more adaptive and harder to detect.

- Social engineering: AI can analyse social media profiles to create convincing social engineering attacks.

- Data manipulation: AI can be used to alter data in ways that are difficult to detect, undermining the integrity of information systems.

Case study: The $25 million deepfake scam

In 2024, a global engineering firm fell victim to a sophisticated cyberattack that used deepfake technology, resulting in a $25 million loss.

What happened?

Attackers created an incredibly convincing video deepfake of the company’s CFO. During a video call, the fake CFO instructed a senior financial employee to urgently authorise several large fund transfers, citing a critical business deal. Trusting the authenticity of the call, the employee approved the transfers, unwittingly sending the funds to fraudulent accounts.

DFIR analysts played a key role in uncovering the scam:

- Video analysis: Experts detected subtle signs of deepfake manipulation, such as inconsistencies in lighting and minor irregularities in speech synchronisation.

- Transaction tracking: Investigators traced the funds through a web of international accounts, though recovery efforts were hampered by their rapid dispersal.

- Process review: The company identified weaknesses in its financial controls and implemented stricter verification protocols, including multi-channel confirmation for high-value transactions.

This case underscores the importance of combining technology, training, and robust incident response to counter increasingly sophisticated cyber threats.

The intrinsic weaknesses of AI-generated fakes

While advanced, AI-generated fakes are not infallible. Their vulnerabilities include:

Pattern predictability

GANs often leave artefacts that can be systematically identified.

Repetitive Features: AI-generated images often exhibit repeated textures, such as duplicated strands of hair, unnatural patterns in backgrounds, or overly smooth skin, which differ from the variability seen in real images.
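
Repeated regions are one of the easier artefacts to hunt for in code. As a minimal sketch, the example below slides a window over a grid of greyscale values and groups windows whose pixels are identical. Exact matching is our simplifying assumption: it only works on lossless data, whereas real clone-detection tools compare more robust features so that matches survive compression. The block size and the synthetic image are also assumptions.

```python
from collections import defaultdict


def find_cloned_blocks(gray, block=4, step=1):
    """Return groups of (top, left) positions whose pixel blocks are identical."""
    rows, cols = len(gray), len(gray[0])
    seen = defaultdict(list)
    for top in range(0, rows - block + 1, step):
        for left in range(0, cols - block + 1, step):
            key = tuple(
                gray[y][x]
                for y in range(top, top + block)
                for x in range(left, left + block)
            )
            # Ignore completely flat blocks, which match everywhere in
            # areas such as clear sky and would swamp the results.
            if len(set(key)) > 1:
                seen[key].append((top, left))
    return [positions for positions in seen.values() if len(positions) > 1]


# Synthetic 8x8 image where every pixel is unique, then the top-left 4x4
# patch is copied over the bottom-right corner to simulate a cloned region.
image = [[y * 8 + x for x in range(8)] for y in range(8)]
for y in range(4):
    for x in range(4):
        image[4 + y][4 + x] = image[y][x]

print(find_cloned_blocks(image))  # [[(0, 0), (4, 4)]]
```

Each group lists the top-left corners of matching blocks, which tells you where to look in the image for duplicated hair, backgrounds, or textures.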

Operational costs

Creating high-quality fakes requires substantial computational resources.

For example, generating a fabricated video of a public figure with smooth lip-sync, natural facial expressions, and high-resolution output often demands powerful GPUs or cloud computing services, which can incur high costs. This makes it challenging for low-budget actors to produce convincing fakes.

Regulatory pressure

As awareness grows, attackers face greater scrutiny and risks.

In the UK, legislation such as the Online Safety Act aims to regulate harmful online content, including deepfakes. Platforms may face fines of up to £18 million or 10% of their global turnover, whichever is greater, for failing to address deepfake content, which significantly increases scrutiny and discourages attackers from sharing such manipulations.

How to detect and mitigate these issues

Educate and train: Regularly educate employees and stakeholders about the risks of AI-generated fakes and how to identify them. Training should include recognising signs of deepfakes, synthetic documents, and other AI-generated content.

Implement verification protocols: Establish robust verification protocols for high-value transactions and sensitive communications. This can include multi-factor authentication, multi-channel confirmation, and digital signatures.

Promote a culture of scepticism: Encourage a culture where employees are sceptical of unsolicited communications and verify the authenticity of suspicious content.

Collaborate with experts: Work with cybersecurity experts, forensic analysts, and academic researchers to stay updated on the latest detection techniques and threats.

Adopt digital signatures: Where appropriate, use digital signatures to verify the authenticity and integrity of documents, media, and other digital content. This can be worthwhile in certain cases, such as:

- Official documents: Ensuring that contracts, IDs, and other critical documents are genuine.

- Media content: Verifying the source of videos and images, especially in journalism and legal contexts.

- Software and code: Ensuring that software updates and code repositories have not been tampered with.
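
To show the verify-before-trust idea in code, here is a minimal sketch using only Python’s standard library. A true digital signature uses an asymmetric key pair, which the standard library does not provide, so this example swaps in an HMAC: a tag computed with a shared secret that proves the content has not changed since it was tagged. The key and document contents are hypothetical.

```python
import hashlib
import hmac


def make_tag(data: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the content using a shared secret."""
    return hmac.new(key, data, hashlib.sha256).hexdigest()


def verify_tag(data: bytes, key: bytes, tag: str) -> bool:
    """Check the content against its tag using a constant-time comparison."""
    return hmac.compare_digest(make_tag(data, key), tag)


# Hypothetical shared secret and document, for illustration only.
key = b"example-shared-secret"
contract = b"Pay 10,000 GBP to account 12345678"
tag = make_tag(contract, key)

print(verify_tag(contract, key, tag))                                # True
print(verify_tag(b"Pay 90,000 GBP to account 87654321", key, tag))   # False
```

Unlike a real signature, anyone holding the shared key can create valid tags, so this suits internal integrity checks rather than proving authorship to a third party.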

Conclusion: Staying ahead in the AI arms race

AI-generated fakes are a formidable challenge for DFIR teams, but not an insurmountable one. By leveraging advanced detection methods, fostering collaboration, and investing in continuous training, practitioners can maintain their edge. As AI continues to evolve, so must the tools and strategies of DFIR professionals. The key lies in staying proactive, adaptable, and relentless in the pursuit of truth in an increasingly deceptive digital world.

Recommendations

Training employees to spot AI-driven threats is essential for minimising risks. Regularly educate your team on how to recognise AI-enhanced phishing emails, fake media, and social engineering attempts to ensure they are well-prepared.

It is important to verify before trusting any communication. Promoting a culture of scepticism within your organisation can help achieve this. Encourage employees to double-check the authenticity of any unsolicited communication or content that appears suspicious.

Enhancing incident response plans is crucial for dealing with emerging AI-related threats. Updating your incident response strategy ensures your team is prepared to quickly identify and contain attacks driven by artificial intelligence.

Staying compliant and informed about the latest regulations regarding AI and data security is vital. Keeping up to date will help your business avoid potential legal repercussions from AI-generated threats.

Strategies for DFIR teams

Enhance training programmes: Equip analysts with skills to detect synthetic artefacts, focusing on identifying GAN-specific artefacts and analysing metadata for subtle discrepancies.

Develop and deploy AI countermeasures: Invest in AI tools designed to detect and flag synthetic data and validate content against known authentic samples.

Strengthen collaboration: Engage with academia to access cutting-edge research on deepfake detection, and with industry to share intelligence on emerging threats.

Establish forensic standards: Advocate for digital provenance tools to ensure content is traceable to its source, and for regulations mandating the use of tamper-proof technologies in sensitive communications.

Tools used

- ExifTool

- HxD

- GrokAI

- ChatGPT

- Forensically

- C2PA

- ContentCredentials

- PhotoForensics
